Introduction

After hearing about Rump kernels [1] from Gedare Bloom (one of the RTEMS maintainers), I started researching them and whether RTEMS could support such an architecture. A Rump kernel is a way to run unmodified NetBSD kernel drivers virtually anywhere. On a platform that supports Rump kernels, a developer can pick up NetBSD drivers (which have been tested and proven to work properly), then compile and link them without any modifications to the driver source code or to the host kernel itself. Moreover, these drivers can be upgraded from NetBSD upstream without significant effort. So what exactly are a Rump kernel, an Anykernel, and the so-called platform?

Rump kernel is not a fully-featured OS neither a complete virtual machine like KVM or VirtualBox. It's a minimal thin implementation that enables the host platform (see the platform section) to emulate the system calls layer that NetBSD drivers expect/call. Rump kernel is hardware-agnostic, meaning that it does not depend on specific hardware features like virtualization and cache-coherence. For example, kernel drivers need some way of allocating memory (using

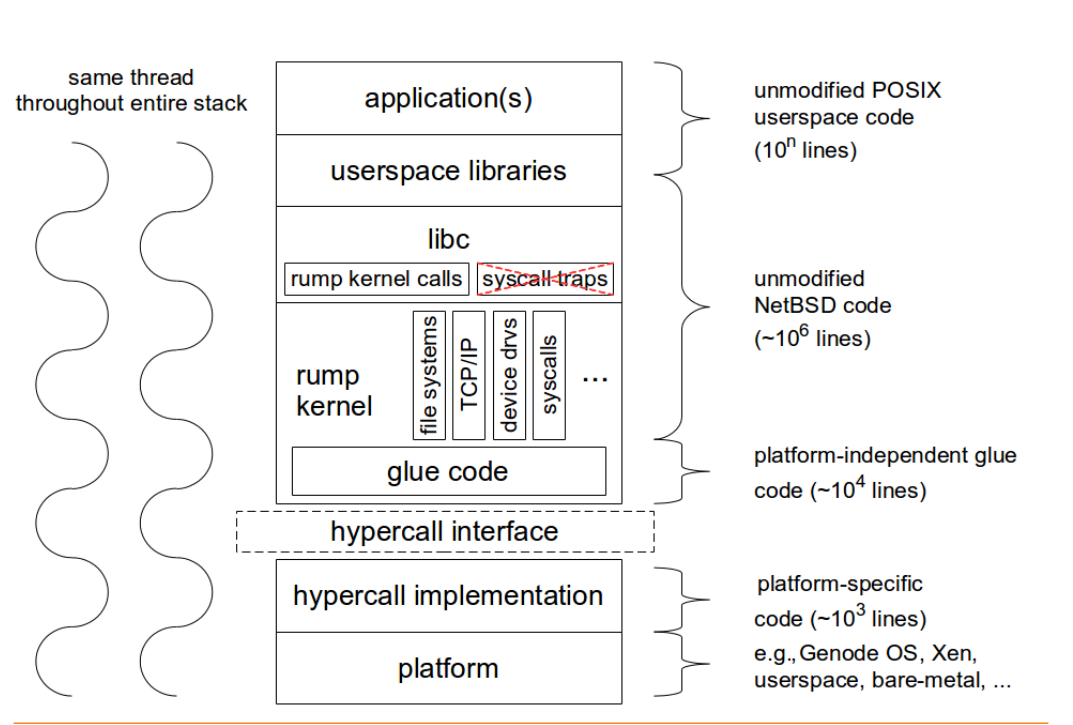

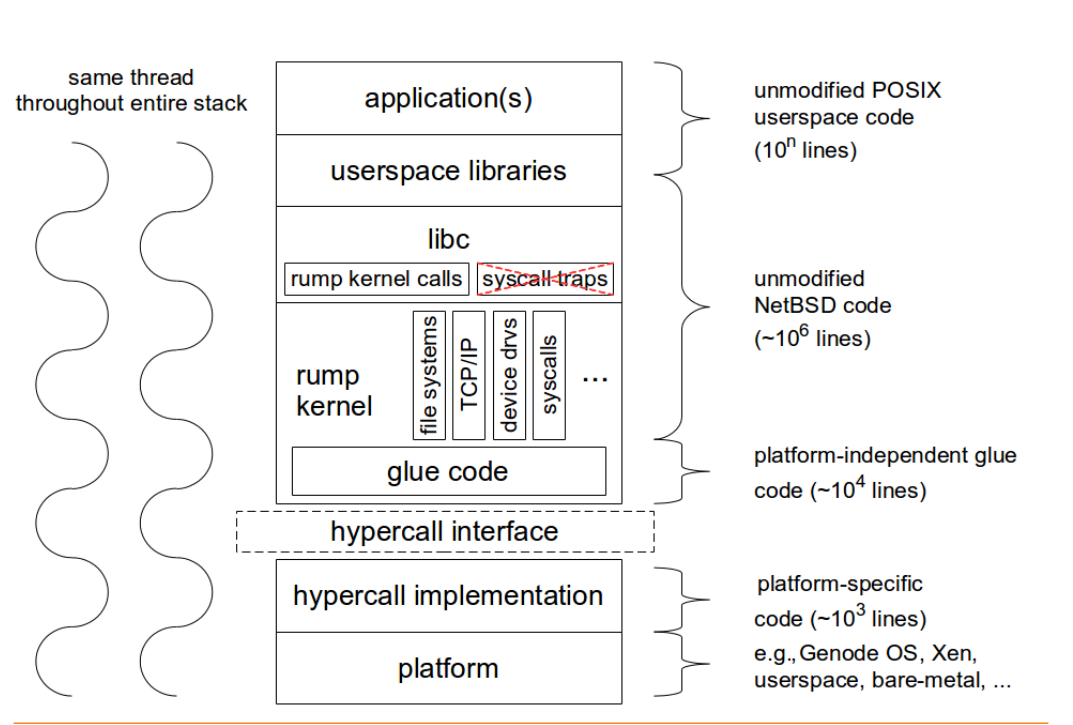

rumpuser_malloc), it doesn't really matter whether this is a virtual/logical memory (allocated address space using page-table), or fixed physical addresses; it depends on the platform, what concerns Rump kernels is to freely allocate, use and free this area of memory. That's, Rump kernels try to make use of the underlying software platform features as possible as could be in parallel with giving the illusion (and of course working work-arounds) to the drivers that they get what they need! At this point you may be wondering about the structure of Rump kernels and how it depends/relates to the platform. The following figure [2] may make it clearer. Please note that libc and the layers above it are optional.

Figure 1: Rump Kernel Environment

As you can see, Rump kernel support is stacked. At the top of the stack comes the application, which can be POSIX-compliant. The next section illustrates some of these stack components and how RTEMS (as an example platform) and Rump kernels can work together.

Platform

So what is the platform? Basically, the platform can be anything: raw hardware, a virtual machine, or an OS like Linux. Some platform implementations already exist. Rump kernels can run in POSIX userspace on "Linux, Android, NetBSD, FreeBSD, OpenBSD, Dragonfly BSD, Solaris (+ derivates) and Windows (via Cygwin)" [3]. Other implementations run on bare-metal machines, including virtual machines such as KVM and VirtualBox, or on hypervisors such as Xen. Genode OS has been modified to support Rump kernels [4], and so has Minix. So, can RTEMS be the next platform? The simple answer is yes!

RTEMS is a POSIX-compliant RTOS, so with a small effort a Rump kernel can run on top of this RTEMS/POSIX environment. However, from the perspectives of performance, control and code density, it would make more sense to discard this POSIX dependency and write the whole hypercall layer for RTEMS directly (see Figure 1). The userspace POSIX platforms [5] carry a second POSIX library (the userspace libraries in Figure 1) in addition to the host POSIX library. As the authors of Rump kernels say, it is enough for a platform to implement the hypercall layer to support the whole Rump kernel stack. So, in theory, if RTEMS implemented this very thin hypercall layer of roughly 1000 lines of code, all the other NetBSD code could be imported, providing NetBSD drivers, libc, and even an unmodified POSIX library.

The hypercall (AKA rumpuser) layer [7] is divided into basic and IO operations. The complete interface is documented in [6]. Almost all of the functions listed there can be implemented by using or wrapping existing RTEMS features. Some of the interfaces are described below.

Memory Allocation

int rumpuser_malloc(size_t len, int alignment, void **memp)

void rumpuser_free(void *mem, size_t len)

These functions can easily be mapped to the RTEMS libcsupport implementation of malloc/free. Other memory managers, such as the Partition and Region managers, can also be used.
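To make the mapping concrete, here is a minimal sketch of the two memory hypercalls on top of the host C library. It assumes the host provides posix_memalign() (any POSIX environment does); a Partition- or Region-based version would look different, and the handling of small or odd alignments is a choice made for this sketch, not something the rumpuser interface prescribes.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

/*
 * Sketch only: allocate len bytes aligned to `alignment` and return the
 * pointer through memp. Returns 0 on success or an errno value on failure.
 */
int rumpuser_malloc(size_t len, int alignment, void **memp)
{
    size_t align = (size_t)alignment;
    void *mem = NULL;
    int rv;

    /* posix_memalign() needs a power of two that is a multiple of sizeof(void *). */
    if (align < sizeof(void *))
        align = sizeof(void *);
    if ((align & (align - 1)) != 0)
        return EINVAL;

    rv = posix_memalign(&mem, align, len);
    if (rv != 0)
        return rv;

    *memp = mem;
    return 0;
}

void rumpuser_free(void *mem, size_t len)
{
    (void)len; /* free() does not need the size; a Partition-based version might */
    free(mem);
}
```

A caller would pass the address of a pointer, for example rumpuser_malloc(4096, 64, &buf), and later hand the same pointer and length back to rumpuser_free.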

Files and IO

int rumpuser_open(const char *name, int mode, int *fdp)

int rumpuser_close(int fd)

int rumpuser_getfileinfo(const char *name, uint64_t *size, int *type)

void rumpuser_bio(int fd, int op, void *data, size_t dlen, int64_t off, rump_biodone_fn biodone, void *donearg)

int rumpuser_iovread(int fd, struct rumpuser_iovec *ruiov, size_t iovlen, int64_t off, size_t *retv)

int rumpuser_iovwrite(int fd, struct rumpuser_iovec *ruiov, size_t iovlen, int64_t off, size_t *retv)

int rumpuser_syncfd(int fd, int flags, uint64_t start, uint64_t len)

The previous IO functions can be implemented by wrapping IMFS plus some stubs. Most embedded applications do not need a fully featured file system, but if one is needed, the option of wrapping the appropriate RTEMS filesystem is still there. How to configure and enable Rump kernel features is an implementation tradeoff. Currently, rump-posix does it by starting a Rump kernel server with command-line flags that select the features needed from the Rump kernel. For example, the following command line loads a filesystem driver when starting the server:

rumpremote (unix:///tmp/rumpctrlsock)$ ./rumpdyn/bin/rump_server -lrumpvfs unix:///tmp/rumpctrlsock
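Returning to the file hypercalls listed above, the following is a minimal sketch of how the three simplest ones (open, close and getfileinfo) could wrap the host's POSIX file API, which on RTEMS would be backed by IMFS or another mounted filesystem. The SKETCH_* flag and file-type constants are hypothetical placeholders introduced for this example; the real values are defined by the rumpuser interface [6]. The file creation mode 0644 is also an assumption.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical flag values; the real ones come from the rumpuser headers. */
#define SKETCH_OPEN_RDONLY  0x0
#define SKETCH_OPEN_WRONLY  0x1
#define SKETCH_OPEN_RDWR    0x2
#define SKETCH_OPEN_ACCMODE 0x3
#define SKETCH_OPEN_CREATE  0x4

/* Hypothetical file-type codes reported by rumpuser_getfileinfo(). */
enum { SKETCH_FT_OTHER, SKETCH_FT_DIR, SKETCH_FT_REG };

int rumpuser_open(const char *name, int mode, int *fdp)
{
    int flags, fd;

    /* Translate the hypercall access mode into host open(2) flags. */
    switch (mode & SKETCH_OPEN_ACCMODE) {
    case SKETCH_OPEN_RDONLY: flags = O_RDONLY; break;
    case SKETCH_OPEN_WRONLY: flags = O_WRONLY; break;
    case SKETCH_OPEN_RDWR:   flags = O_RDWR;   break;
    default:                 return EINVAL;
    }
    if (mode & SKETCH_OPEN_CREATE)
        flags |= O_CREAT;

    fd = open(name, flags, 0644);
    if (fd == -1)
        return errno;

    *fdp = fd;
    return 0;
}

int rumpuser_close(int fd)
{
    return close(fd) == -1 ? errno : 0;
}

int rumpuser_getfileinfo(const char *name, uint64_t *size, int *type)
{
    struct stat sb;

    if (stat(name, &sb) == -1)
        return errno;

    if (size != NULL)
        *size = (uint64_t)sb.st_size;
    if (type != NULL) {
        if (S_ISREG(sb.st_mode))
            *type = SKETCH_FT_REG;
        else if (S_ISDIR(sb.st_mode))
            *type = SKETCH_FT_DIR;
        else
            *type = SKETCH_FT_OTHER;
    }
    return 0;
}
```

Each function returns 0 on success and an errno value on failure, so errors from the host filesystem reach the caller unchanged.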

Clocks

"The hypervisor should support two clocks, one for wall time and one for monotonically increasing time, the latter of which may be based on some arbitrary time (e.g. system boot time). If this is not possible, the hypervisor must make a reasonable effort to retain semantics." [6]

RTEMS provides all of the required clock services, such as _Watchdog_Ticks_since_boot. It offers several time management facilities, including Watchdog, Time, the Clock manager, Timer benchmark (which may or may not depend on the Clock manager) and CPU Counter (deprecated?). Hence, there are more than enough implementations to support the clock part of the hypercall interface.
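As an illustration of the two-clock requirement quoted above, here is a minimal sketch built on the POSIX clock_gettime() call rather than on the RTEMS-internal services. The function name sketch_clock_gettime and the SKETCH_CLOCK_* selectors are hypothetical; the actual hypercall name, signature and clock identifiers should be taken from [6].

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical selectors standing in for the rumpuser clock identifiers. */
enum sketch_clock { SKETCH_CLOCK_WALL, SKETCH_CLOCK_MONO };

/*
 * Sketch only: read either the wall clock or the monotonic clock and
 * return the result as seconds plus nanoseconds.
 * Returns 0 on success or an errno value on failure.
 */
int sketch_clock_gettime(int which, int64_t *sec, long *nsec)
{
    struct timespec ts;
    clockid_t id;

    switch (which) {
    case SKETCH_CLOCK_WALL: id = CLOCK_REALTIME;  break;
    case SKETCH_CLOCK_MONO: id = CLOCK_MONOTONIC; break;
    default:                return EINVAL;
    }

    if (clock_gettime(id, &ts) == -1)
        return errno;

    *sec = (int64_t)ts.tv_sec;
    *nsec = ts.tv_nsec;
    return 0;
}
```

The monotonic clock here counts from an arbitrary point chosen by the host, which matches the quoted requirement that it "may be based on some arbitrary time".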

Console output

"Console output is divided into two routines: a per-character one and printf-like one. The former is used e.g. by the rump kernel's internal printf routine. The latter can be used for direct debug prints e.g. very early on in the rump kernel's bootstrap or when using the in-kernel routine causes too much skew in the debug print results (the hypercall runs outside of the rump kernel and therefore does not cause any locking or scheduling events inside the rump kernel)." [6]

RTEMS provides both!

Threads

int rumpuser_thread_create(void *(*fun)(void *), void *arg, const char *thrname, int mustjoin, int priority, int cpuidx, void **cookie)

void rumpuser_thread_exit(void)

int rumpuser_thread_join(void *cookie)

void rumpuser_curlwpop(int enum_rumplwpop, struct lwp *l)

For the most part, thread management is mapped directly onto the host threading implementation. When a Rump kernel driver creates a thread, the host actually creates the thread and schedules it according to its own policy. It does not matter how the host implements threading. For RTEMS, all of these functions can easily be mapped to the corresponding RTEMS ones.
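A minimal sketch of that mapping, assuming the host exposes POSIX threads (as RTEMS does through its POSIX API), might look like the following. It ignores the thread name, priority and CPU index hints, which a real port would translate into host scheduling attributes, and the helper sketch_worker exists only to demonstrate usage.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <stdlib.h>

int rumpuser_thread_create(void *(*fun)(void *), void *arg,
    const char *thrname, int mustjoin, int priority, int cpuidx,
    void **cookie)
{
    pthread_t *ptid;
    int rv;

    /* Assumption of this sketch: the scheduling hints are simply ignored. */
    (void)thrname;
    (void)priority;
    (void)cpuidx;

    ptid = malloc(sizeof(*ptid));
    if (ptid == NULL)
        return ENOMEM;

    rv = pthread_create(ptid, NULL, fun, arg);
    if (rv != 0) {
        free(ptid);
        return rv;
    }

    if (mustjoin) {
        *cookie = ptid;          /* handed back to rumpuser_thread_join() */
    } else {
        pthread_detach(*ptid);   /* nobody will join, let the host reclaim it */
        free(ptid);
    }
    return 0;
}

void rumpuser_thread_exit(void)
{
    pthread_exit(NULL);
}

int rumpuser_thread_join(void *cookie)
{
    pthread_t *ptid = cookie;
    int rv = pthread_join(*ptid, NULL);

    if (rv == 0)
        free(ptid);
    return rv;
}

/* Hypothetical demo thread body: stores 42 through its argument. */
void *sketch_worker(void *arg)
{
    *(int *)arg = 42;
    return NULL;
}
```

Creating a joinable thread with sketch_worker and then calling rumpuser_thread_join on the returned cookie leaves 42 in the integer that was passed as the argument.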

Synchronization and Mutexes

RTEMS provides normal mutex operations. Read/write locks and condition variables are also needed.
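One possible way to supply the read/write lock part is to wrap the host's POSIX rwlock, assuming the POSIX threads API is enabled on the host. The sketch below uses hypothetical sketch_rw_* names and a hypothetical wrapper structure; it does not reproduce the real rumpuser lock functions, whose names and signatures are listed in [6].

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical wrapper type; not the real rumpuser lock structure. */
struct sketch_rw {
    pthread_rwlock_t lock;
};

int sketch_rw_init(struct sketch_rw **rwp)
{
    struct sketch_rw *rw = malloc(sizeof(*rw));
    int rv;

    if (rw == NULL)
        return ENOMEM;
    rv = pthread_rwlock_init(&rw->lock, NULL);
    if (rv != 0) {
        free(rw);
        return rv;
    }
    *rwp = rw;
    return 0;
}

/* Block until the lock is held; writer != 0 requests exclusive access. */
int sketch_rw_enter(struct sketch_rw *rw, int writer)
{
    return writer ? pthread_rwlock_wrlock(&rw->lock)
                  : pthread_rwlock_rdlock(&rw->lock);
}

/* Non-blocking variant: returns EBUSY if the lock cannot be taken now. */
int sketch_rw_tryenter(struct sketch_rw *rw, int writer)
{
    return writer ? pthread_rwlock_trywrlock(&rw->lock)
                  : pthread_rwlock_tryrdlock(&rw->lock);
}

int sketch_rw_exit(struct sketch_rw *rw)
{
    return pthread_rwlock_unlock(&rw->lock);
}

void sketch_rw_destroy(struct sketch_rw *rw)
{
    pthread_rwlock_destroy(&rw->lock);
    free(rw);
}
```

Condition variables could be wrapped in the same style around pthread_cond_t, paired with the mutex wrapper that the hypercall layer already needs.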

What then?

If we had this hypercall layer on RTEMS, no other effort would be needed. The rest of the large NetBSD code base could be linked as is! At this stage we could try out some NetBSD drivers. We could also have some fun with the Rump kernel remote/client mode, which separates the kernel from the clients (applications). For example, we could have a bare-metal client connecting to Rump kernels on RTEMS, or vice versa, communicating over IPC and TCP/IP. This platform can be set up using simulators or real hardware.

Another interesting direction is using Rump kernels to tackle the scalability issues that RTEMS currently faces, as an alternative to complex fine-grained locking. Cores with attached IO devices could run Rump kernels and act as servers for clients on other cores, communicating via inter-processor interrupts, message passing, shared memory or some other mechanism.

References

[1] http://rumpkernel.org/

[2] Rump Kernels No OS? No Problem!

[3] Rump Kernels Platforms

[4] Genode OS and Rump Kernels.

[5] Userspace (POSIX) Rump kernel.

[6] rumpuser - NetBSD Manual Pages

[7] https://github.com/rumpkernel/buildrump.sh/issues/59